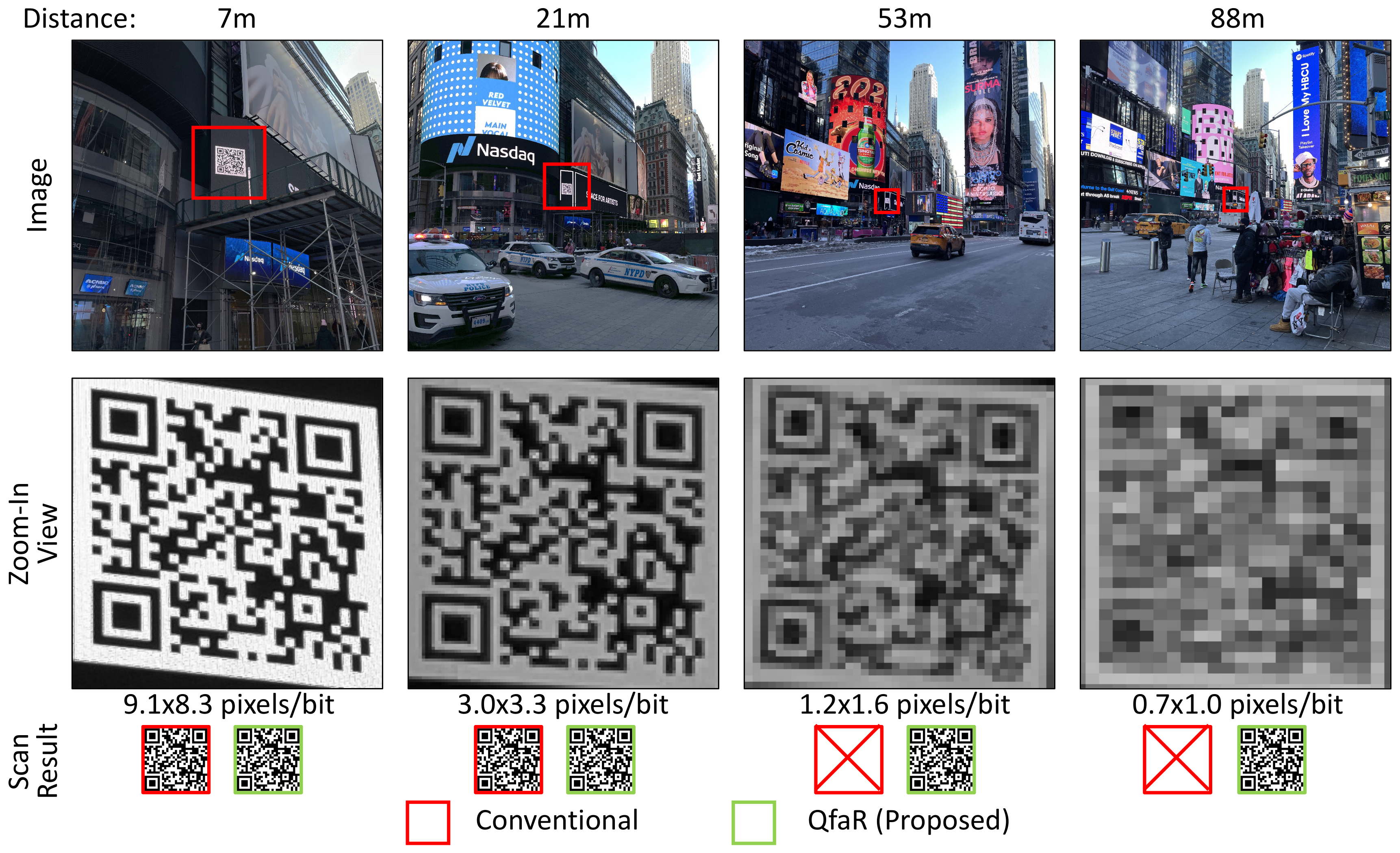

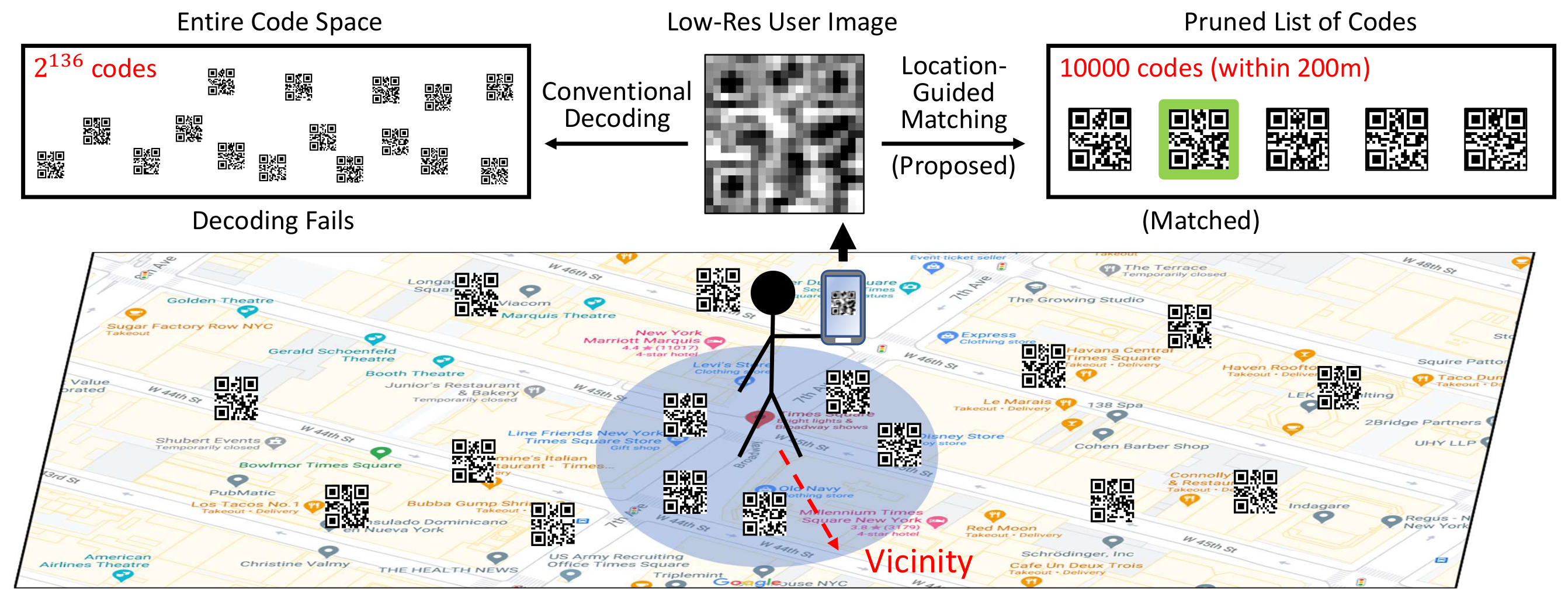

Visual codes such as QR codes provide a low-cost and convenient communication channel between physical objects and mobile devices, but they typically work only when the code and the device are in close physical proximity. We propose a system, called QfaR, which enables mobile devices to scan visual codes across long distances, even where the image resolution of the visual codes is extremely low. QfaR is based on location-guided code scanning, where we utilize a crowdsourced database of the physical locations of codes. Our key observation is that if the approximate locations of the codes and the user are known, the space of possible codes can be dramatically pruned. Then, even if not every single bit of the low-resolution code can be recovered, QfaR can still identify the visual code from the pruned list with high probability. By applying computer vision techniques, QfaR is also robust to challenging imaging conditions such as tilt and motion blur. Experimental results with common iOS and Android devices show that QfaR significantly increases the distances at which codes can be scanned, e.g., 3.6 cm codes can be scanned at a distance of 7.5 meters, and 0.5 m codes at about 100 meters. QfaR has many potential applications, and beyond our diverse experiments, we also conduct a simple case study on its use for efficiently scanning QR code-based badges to estimate event attendance.

Do we need to recover every single bit? Conventional QR code decoders use Reed-Solomon error correction to make decoding tolerant to errors. However, since the goal is to find a unique code in the entire code space (over 2^100 codes), decoding remains challenging for heavily degraded images. If we know the approximate locations of the user and the codes, the list of possible codes can be significantly pruned, which makes the problem of finding the right code much easier.
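The pruning step can be illustrated with a minimal Python sketch. It is not the QfaR implementation: the database layout (`payload`, `lat`, `lon` fields), the function names, and the 200 m search radius are all assumptions made for illustration. It keeps only the codes whose registered location lies within a fixed radius of the user, using the standard haversine formula for great-circle distance.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in meters


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two (lat, lon) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def prune_codes(user_lat, user_lon, database, radius_m=200.0):
    """Keep only entries whose stored location is within radius_m of the user.

    radius_m is a hypothetical default, not a value taken from the paper.
    """
    return [
        entry for entry in database
        if haversine_m(user_lat, user_lon, entry["lat"], entry["lon"]) <= radius_m
    ]


# Hypothetical database: two codes near the user, one on another coast.
database = [
    {"payload": "https://example.com/a", "lat": 40.8075, "lon": -73.9626},
    {"payload": "https://example.com/b", "lat": 40.8080, "lon": -73.9620},
    {"payload": "https://example.com/c", "lat": 34.0522, "lon": -118.2437},
]
candidates = prune_codes(40.8076, -73.9625, database)
```

Here the candidate list shrinks from three entries to the two nearby ones. In a real deployment the radius would presumably depend on GPS accuracy and on the maximum scanning distance.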

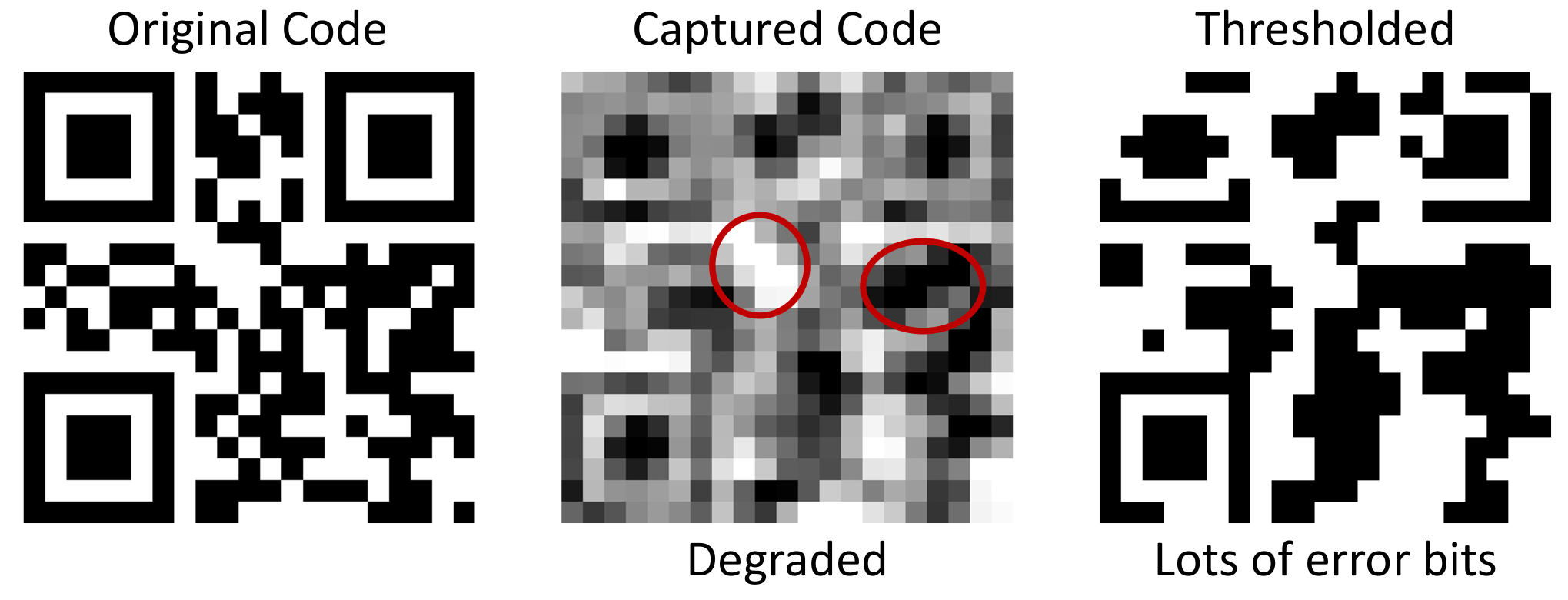

How do we find the correct code from the pruned list? Conventional decoders apply thresholding to the captured, degraded code image to obtain a binary code, which contains many bit errors and therefore cannot be decoded. Although the captured code image is heavily degraded, it still contains visual features such as blobs of black/white bits. Therefore, we propose to treat the captured codes as "images" and match them based on their intensities. Specifically, we find the candidate code with the shortest L2 distance to the scanned code (template matching). Please refer to the paper for more insights and the reasoning behind this design.
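The intensity-based matching can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not the paper's implementation: images are nested lists of grayscale values in [0, 1], the scanned code has already been rectified and resampled to the same grid as the candidates, and the names `l2_distance` and `match_code` are hypothetical.

```python
def l2_distance(img_a, img_b):
    """Euclidean (L2) distance between two equally sized grayscale images."""
    total = 0.0
    for row_a, row_b in zip(img_a, img_b):
        for a, b in zip(row_a, row_b):
            total += (a - b) ** 2
    return total ** 0.5


def match_code(scanned, candidates):
    """Return the name of the candidate image closest to the scanned image."""
    return min(candidates, key=lambda name: l2_distance(scanned, candidates[name]))


# Toy 3x3 "codes": 1 is a white module, 0 is a black module.
candidates = {
    "code_a": [[0, 1, 1], [1, 0, 0], [0, 0, 1]],
    "code_b": [[1, 1, 0], [0, 0, 1], [1, 0, 1]],
}
# A blurred, low-contrast capture with no clean 0/1 values.
scanned = [[0.3, 0.7, 0.6], [0.6, 0.4, 0.3], [0.4, 0.3, 0.6]]
best = match_code(scanned, candidates)
```

Because the comparison runs only over the pruned list, a capture that is too degraded for bit-by-bit decoding can still be closer to one candidate than to all the others.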

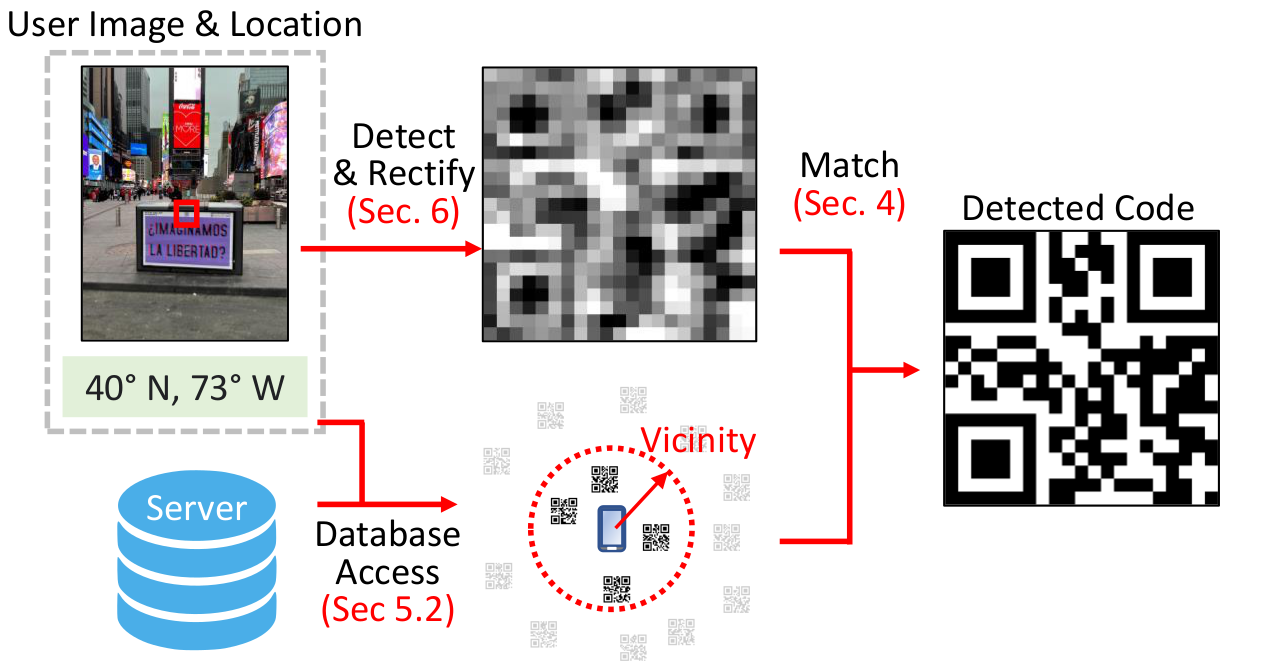

The complete pipeline of the proposed method is as follows. When the user wants to scan a code, the app takes a picture of the current scene. The captured image is sent to a QR code detector, which crops out only the part of the image that contains the code. Simultaneously, the GPS location of the user is sent to a database, which looks up the list of QR codes in the vicinity. The scanned code image is then matched against this list using the intensity-based matching described above. Next, we describe the two remaining pieces in detail: (1) code detection and (2) the code database.

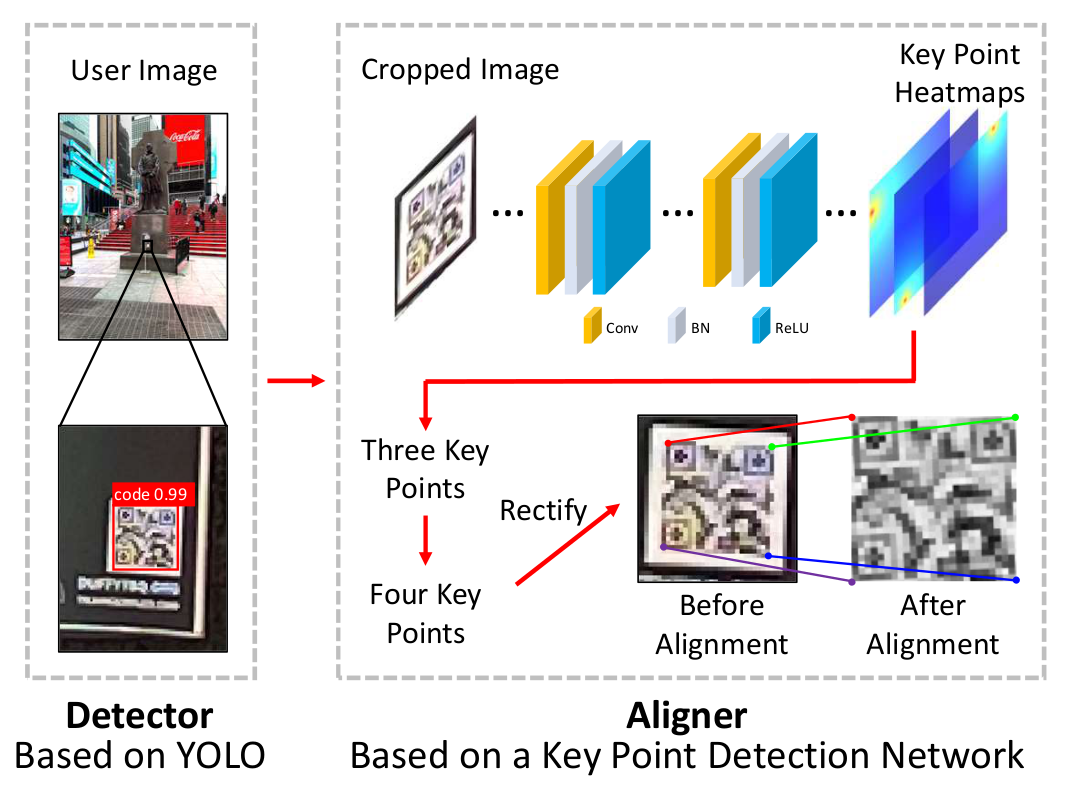

We first use a YOLO network to detect a bounding box of the QR code in the scene. We then use a key point detection network to predict a heat map of potential key points (three corners). The fourth corner is predicted by assuming that the four key points form a parallelogram (weak-perspective projection). A homography is computed to transform the parallelogram into a square (rectification), so that codes captured at different viewing angles can be matched directly. Both networks are trained on simulated data generated with physics-based imaging models.
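The geometry of this step can be sketched as follows. The sketch assumes the three detected corners are the top-left, top-right, and bottom-left corners of the code, and the function names are hypothetical. Under the parallelogram assumption, the fourth corner follows from vector addition, and the homography from the parallelogram to a square reduces to an affine map, so the sketch solves a 2x2 linear system instead of a general homography.

```python
def fourth_corner(top_left, top_right, bottom_left):
    """Complete a parallelogram: bottom_right = top_right + bottom_left - top_left."""
    return (
        top_right[0] + bottom_left[0] - top_left[0],
        top_right[1] + bottom_left[1] - top_left[1],
    )


def rectify_point(point, top_left, top_right, bottom_left):
    """Map an image point to (u, v) coordinates in the unit square of the code."""
    # Edge vectors of the parallelogram, starting at the top-left corner.
    ax, ay = top_right[0] - top_left[0], top_right[1] - top_left[1]
    bx, by = bottom_left[0] - top_left[0], bottom_left[1] - top_left[1]
    det = ax * by - ay * bx
    if abs(det) < 1e-12:
        raise ValueError("corners are collinear")
    dx, dy = point[0] - top_left[0], point[1] - top_left[1]
    # Solve point = top_left + u * a + v * b with Cramer's rule.
    u = (dx * by - dy * bx) / det
    v = (ax * dy - ay * dx) / det
    return u, v


# Hypothetical key points in pixel coordinates.
tl, tr, bl = (10.0, 20.0), (50.0, 30.0), (5.0, 60.0)
br = fourth_corner(tl, tr, bl)
u, v = rectify_point(br, tl, tr, bl)
```

Sampling the image on a regular grid of (u, v) values would then yield a square, viewpoint-normalized code image that can be compared against the candidates.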

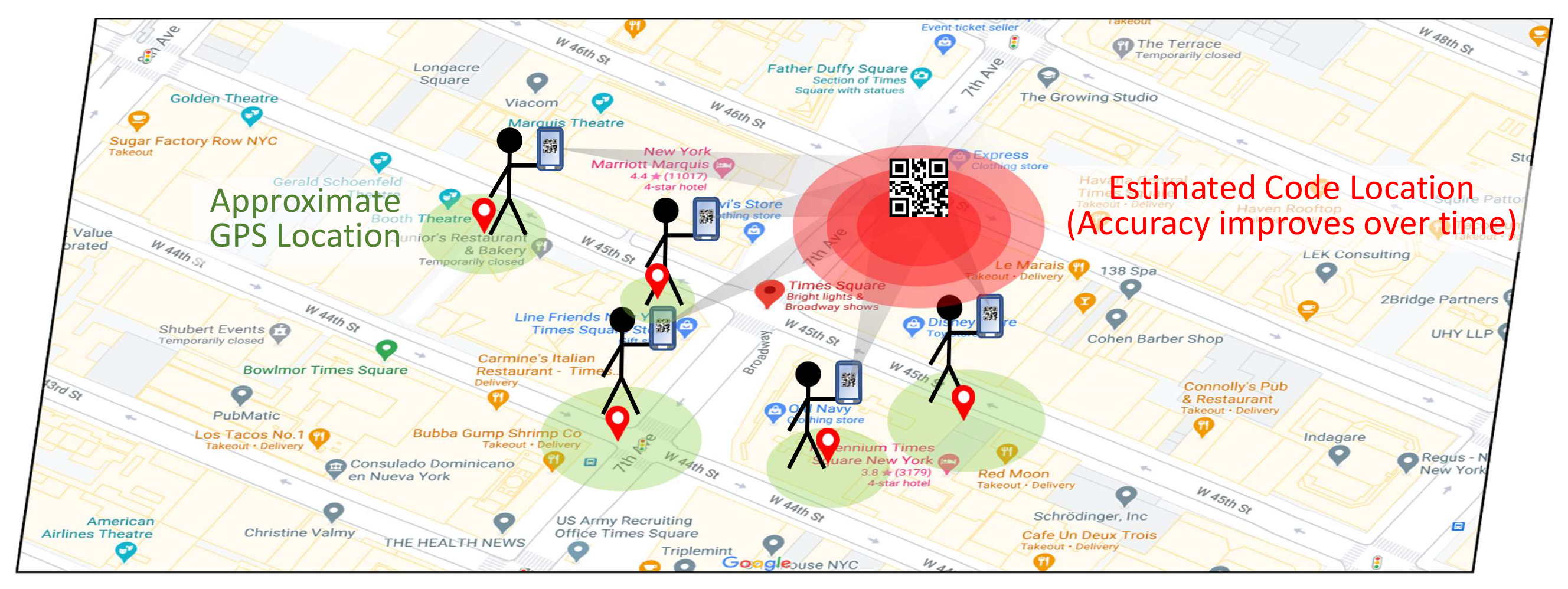

Instead of manually creating a spatially indexed database of QR codes, we propose building the database through crowdsourcing. Every time a user scans a QR code with a conventional QR code scanner, the approximate GPS location of the user is saved and registered to that code in the database. The GPS location of the code is then estimated as a weighted average of past user locations, and its accuracy improves over time as more users scan the code.
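A minimal sketch of the location estimate is shown below. The text above does not specify the weights, so this example assumes inverse-variance weights derived from the accuracy radius that a phone reports with each GPS fix. The function name and the scan tuple layout are likewise hypothetical. Averaging latitude and longitude directly is a reasonable approximation only because all scans of one code lie close together.

```python
def estimate_code_location(scans):
    """Weighted mean of user locations.

    Each scan is (lat_deg, lon_deg, accuracy_m) with accuracy_m > 0.
    Weights are 1 / accuracy_m**2, an assumed choice rather than the
    paper's: more precise fixes count more.
    """
    if not scans:
        raise ValueError("need at least one scan")
    weights = [1.0 / (acc ** 2) for _, _, acc in scans]
    total = sum(weights)
    lat = sum(w * s[0] for w, s in zip(weights, scans)) / total
    lon = sum(w * s[1] for w, s in zip(weights, scans)) / total
    return lat, lon


# Two hypothetical scans of the same code: a precise fix and a coarse one.
scans = [(40.0, -74.0, 5.0), (40.0002, -74.0002, 10.0)]
lat, lon = estimate_code_location(scans)
```

The estimate lands four times closer to the 5 m fix than to the 10 m fix, and each additional scan refines it further.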

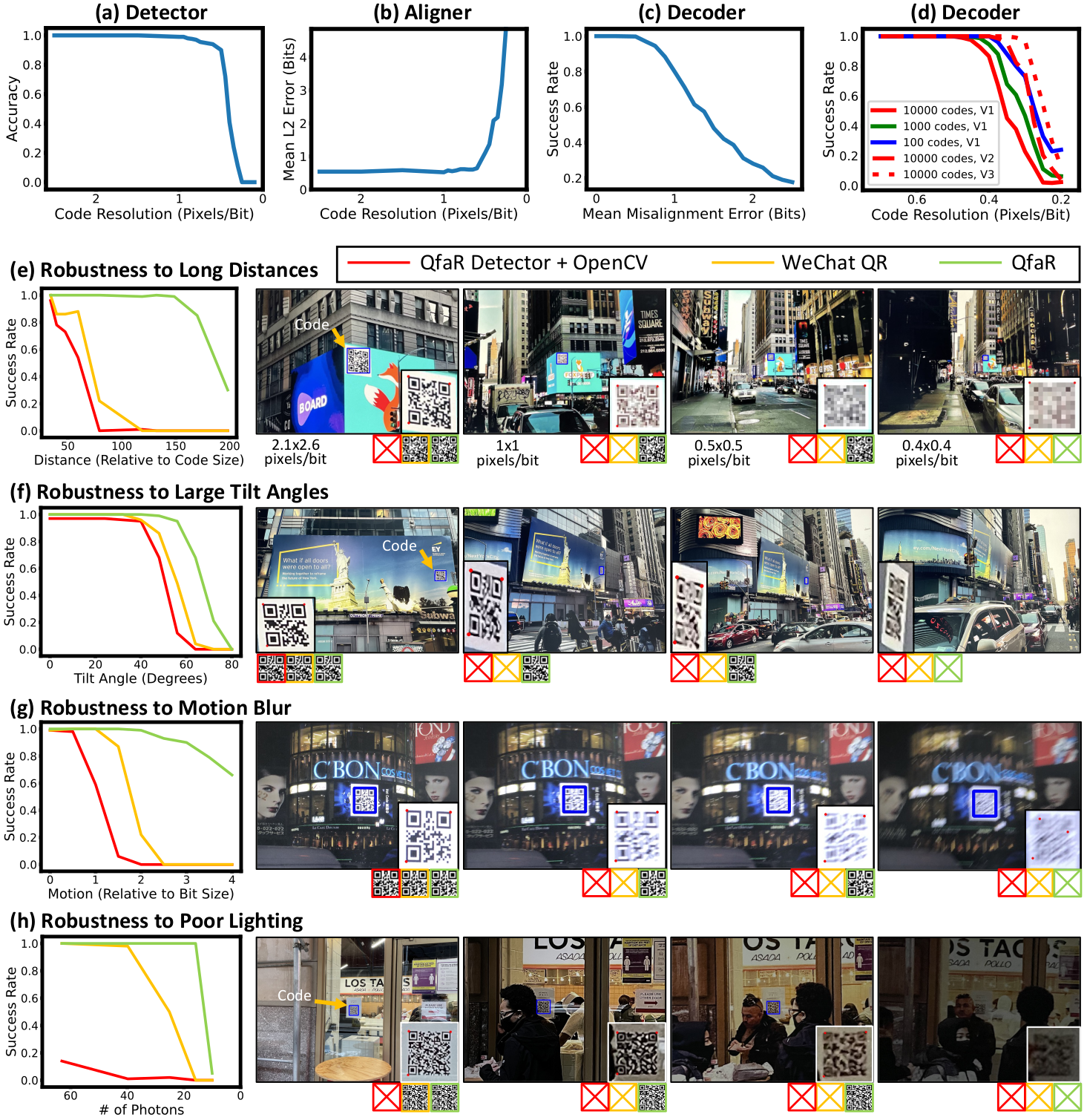

We evaluate each component of QfaR separately. We also compare QfaR with existing methods and show that it is much more robust to long distances, large tilt angles, motion blur and poor lighting.

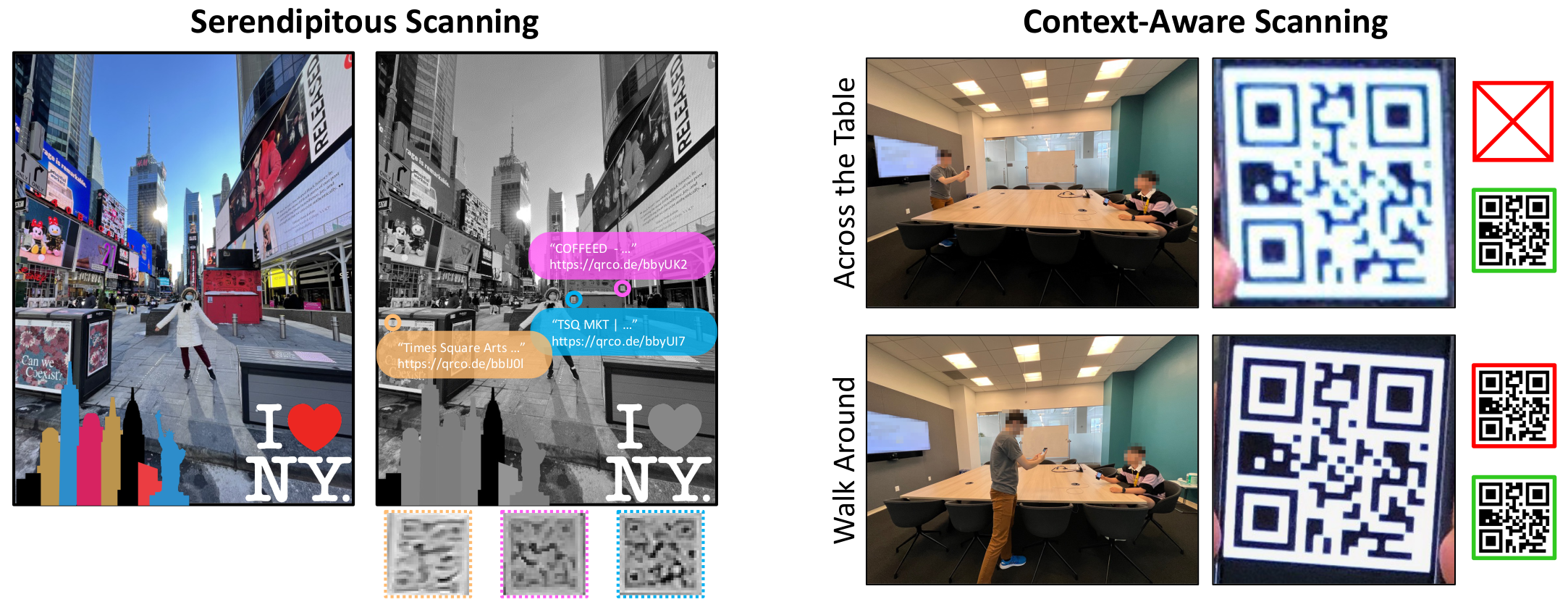

In addition to long-distance attentive scanning, QfaR can also be used for serendipitous scanning, in which extremely small codes are discovered by surprise even though the user has no intention of scanning a code while using a camera app. The idea of pruning the code space also enables context-aware scanning, where the code space is pruned using other kinds of contextual information. For example, it becomes possible to friend a person across a long table, with the code space pruned down to the people who are using the app right now. QfaR opens up a myriad of possibilities for new applications.

@inproceedings{ma2023qfar,
  author = {Ma, Sizhuo and Wang, Jian and Chen, Wenzheng and Banerjee, Suman and Gupta, Mohit and Nayar, Shree},
  title = {QfaR: Location-Guided Scanning of Visual Codes from Long Distances},
  year = {2023},
  booktitle = {Proceedings of the 29th Annual International Conference on Mobile Computing and Networking},
  articleno = {4},
  numpages = {14},
  series = {MobiCom '23}
}